Learning molecular dynamics: GICnet

Molecules are always in motion, and this motion can be simulated with MD but it needs many steps to evaluate and is very slow. We can accelerate this procedure with machine learning potentials to calculate forces faster. However, machine learning potentials still do not solve the fundamental problem with dynamics: it is iterative, steps cannot be parallelized, quality depends on time step, trajectories are discrete and not smooth.

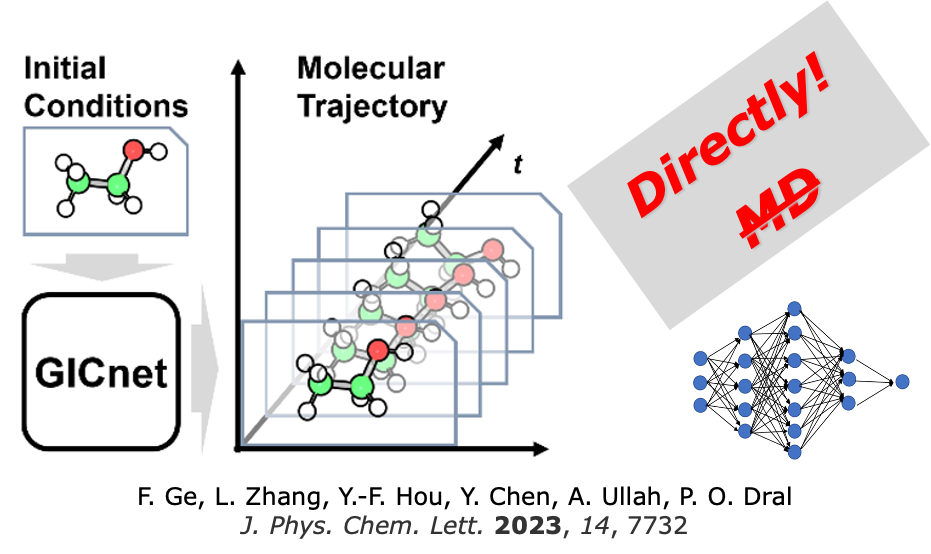

That’s why in March 2022, we introduced a novel concept of directly learning dynamics via 4D-spacetime atomistic AI models (4D models) for short. The idea is very simple but not easy to realize: we predict the nuclear coordinates as a continuous function of time! We can parallelize predictions at different time steps as they do not need to be calculated sequentially. The trajectories also can be obtained with arbitrarily high resolution.

GICnet

We managed to pull off a difficult task of creating a deep learning model to learn dynamics and predict the trajectories fast. This model is called GICnet and was published in JPCL in 2023. In effect, GICnet models are analytical representation of molecules and chemical reactions in 4D spacetime!

Amazingly, our model can learn dynamics of molecules of different complexity and even learns cis-trans isomerization dynamics of azobenzene. The trajectories can be obtained with GICnet orders of magnitude faster than with machine learning potentials.

See our paper for more details (please also cite it if you use GICnet):

Fuchun Ge, Lina Zhang, Yi-Fan Hou, Yuxinxin Chen, Arif Ullah, Pavlo O. Dral*. Four-dimensional-spacetime atomistic artificial intelligence models. J. Phys. Chem. Lett. 2023, 14, 7732–7743. DOI: 10.1021/acs.jpclett.3c01592.

Note

In this tutorial, we only talk about the Python API. For use with an input file/command-line, only a specific version of MLatom supports GICnet, which can be found in GitHub.

Now, let’s move on to the instructions of using GICnet inside MLatom.

Prerequisites

MLatom 3.8.0or latertensorflow v2.17.0(no guarantee for other versions)

Training GICnet models

Here we use ethanol molecule.

First we need to generate some trajectories as the training data. Check this tutorial for running MD in MLatom.

from mlatom.GICnet import GICnet

mol = ml.molecule.from_smiles_string('CCO')

molDB = ml.generate_initial_conditions(

molecule = mol,

generation_method = 'random',

initial_temperature = 300,

number_of_initial_conditions = 8

)

ani = ml.models.methods('ANI-1ccx',tree_node=False)

trajs = [

ml.md(

model=ani,

molecule=mol,

time_step=0.05,

ensemble='NVE',

maximum_propagation_time=10000,

).molecular_trajectory

]

Then, we can use these trajectories to train the GICnet model:

model = GICnet(

model_file='GICnet', # the name of the model file (it's a folder)

hyperparameters={

'ICidx': 'ethanol.ic', # a file that contains the definition of the internal coordinates for ethanol

'tc': 10.0, # the time cutoff

'batchSize3D': 1024, # the batch size for the built-in MLP model

'batchSize4D': 1024, # the batch size for the 4D model

'maxEpoch3D': 1024, # the maximum number of epochs of MLP model training

'maxEpoch4D': 1024, # the maximum number of epochs of 4D model training

}

)

model.train(trajs)

Since GICnet uses internal coordinates to describe the molecule, we need to provide the defintion of the coordinate system. More details can be found in our paper, here is an example for ethanol, for which the definition is saved in file ethanol.ic:

1 0 -1 -2

2 1 0 -1

3 1 2 0

4 1 2 3

5 1 2 3

6 2 1 3

7 2 1 3

8 2 1 3

9 3 1 2

Training status will be printed in stdout in real time:

... ...

----------------------------------------------------

epoch 5 1/1 t_epoch: 0.1

validate: 0.000650

best: 0.000650

----------------------------------------------------

epoch 6 1/1 t_epoch: 0.1

validate: 0.000599

best: 0.000599

----------------------------------------------------

... ...

----------------------------------------------------

epoch 1 1/1 t_epoch: 1.9

D A DA Ep/Ek

train: 0.045998 0.049149 0.036743 0.005203

d/dt 0.009045 0.009566 0.008752 0.000000

Loss 0.842420

validate: 0.031084 0.024374 0.019558 0.001658

d/dt 0.013125 0.010855 0.009795 0.000000

Loss 0.821911

best: 0.031084 0.024374 0.019558 0.001658

d/dt 0.013125 0.010855 0.009795 0.000000

Loss 0.821911

----------------------------------------------------

epoch 2 1/1 t_epoch: 2.0

D A DA Ep/Ek

train: 0.036901 0.049857 0.033790 0.005391

d/dt 0.008148 0.008873 0.008209 0.000000

Loss 0.781977

validate: 0.034426 0.029851 0.021169 0.003375

d/dt 0.012806 0.009719 0.007115 0.000000

Loss 0.752215

best: 0.034426 0.029851 0.021169 0.003375

d/dt 0.012806 0.009719 0.007115 0.000000

Loss 0.752215

----------------------------------------------------

... ...

Propagate trajectories with GICnet model(s)

Once you have a GICnet model, you can use it to propagate dynamics with lightning-fast speed.

from mlatom.GICnet import GICnet

mol=ml.molecule.from_smiles_string('CCO')

mol= ml.generate_initial_conditions(molecule = mol,

generation_method = 'random',

initial_temperature=300)[0]

model = GICnet(model_file='GICnet')

# options are similar to normal MD

dyn = model.propagate(molecule=mol,

maximum_propagation_time=20000, # fs

time_step=0.1 # fs

)

We highly recommend training multiple models and propagate the dynamics with their ensemble to improve the stability of the predictions:

model = GICnet(model_file=['GICnet_0',

'GICnet_1',

'GICnet_2',

'GICnet_3'])

dyn = model.propagate(molecule=mol,

maximum_propagation_time=20000,

time_step=0.1)