Overview

MLatom is a program package for AI-enhanced computational chemistry. It is designed to leverage the power of ML to speed up and make more accurate common simulations and to create complex workflows.

The users can run simulations with input files, Python script, and command-line options.

MLatom is an open-source package that can be installed via pip and cloned from GitHub. Additional advanced features can be obtained via the Aitomic add-ons. The calculations with MLatom can be performed on the Aitomistic Hub and XACS cloud computing service.

Computational chemists can calculate energies and thermochemical properties, optimize geometries of minima and transition states, run various types of molecular dynamics (including NVE, NVT, quasi-classical, surface-hopping trajectories), propagate quantum dynamics, perform diffusion Monte Carlo, and simulate (ro)vibrational (including IR and Raman), one-photon UV/vis absorption, and two-photon absorption spectra with ML, quantum mechanical, and combined models. The users can choose from an extensive library of methods containing universal ML models (our recommendation is UAIQM, but others like AIQM1, ANI-1ccx, ANI-1xnr, AIMNet2, DM21 are supported too); UAIQM, AIQM1, ANI-1ccx are approaching coupled-cluster accuracy at a fraction of cost of DFT. The developers can build their own models using various ML algorithms or use standard quantum mechanical methods (DFT, ab initio, and semi-empirical). Periodic boundary conditions are also supported to some extend. The great flexibility of MLatom is largely due to the extensive use of the interfaces to many state-of-the-art software packages and libraries.

A video overview of the MLatom capabilities:

To get quickly started, please check a simple example illustrating the use of MLatom.

Below we provide a detailed overview of capabilities of MLatom.

Text and figures of this overview are adapted from the paper on MLatom 3 in J. Chem. Theory Comput. 2024, DOI: 10.1021/acs.jctc.3c01203 (published under the CC-BY 4.0 license; see the original paper for more references and theory). More information about MLatom and its developers is on MLatom.com.

Diving into MLatom’s capabilities

MLatom merges the functionality from typical quantum chemical and other atomistic simulation packages with the capabilities of desperate ML packages, with a strong focus on molecular systems. The user can choose from a selection of ready-to-use QM and ML models and design and train ML models to perform the required simulations. The bird’s view of the MLatom capabilities is best given in the figure below:

MLatom as an open-source package can be readily installed via PyPI, i.e., simply using the command pip install mlatom or from the source code available on GitHub at https://github.com/dralgroup/mlatom (see installation instructions as often additional packages are required). To additionally facilitate access to AI-enhanced computational chemistry, MLatom can be conveniently used in the XACS cloud computing service at https://XACScloud.com whose basic functionality is free for noncommercial uses such as education and research. Cloud computing eliminates the need for program installation and might be particularly useful for users with limited computational resources but the choice of features might be limited by the availability of the third-party programs (some of which are commercial and cannot be installed on the cloud).

MLatom enables simulation tasks of interest for computational chemists with generic types of models that can be based on ML, QM, and their combinations. These tasks include single-point calculations, optimization of geometries of minima and transition states (which can be followed by intrinsic reaction coordinate (IRC) analysis), frequency and thermochemical property calculations, molecular and quantum dynamics, rovibrational spectra (infrared (IR) and power) spectra, ML-accelerated UV/vis absorption, and two-photon absorption spectra simulations. This part of MLatom is more similar to traditional QM and MM packages but with much more flexibility in model choice and unique tasks.

Importantly, MLatom also enables the users to create, use, and evaluate their own ML models. The MLatom supports a range of carefully selected representative ML algorithms that can learn the desired properties as a function of the 3D atomistic structure. Typically, these algorithms are used, but not limited to, for learning PESs and hence often can be called, for simplicity, ML (interatomic) potentials (MLPs). One particular specialization of MLatom is the original implementation of kernel ridge regression (KRR) algorithms for learning any property as a function of any user-provided input vectors or XYZ molecular coordinates. In addition, the user can create custom multicomponent models based on concepts of Δ-learning, hierarchical ML, and self-correction. These models may consist of the ML and QM methods. MLatom provides standardized means for training, hyperparameter optimization, and evaluation of the models so that switching from one model type to another may need just one keyword change. This allows one to easily experiment with different models and choose the most appropriate one for the task.

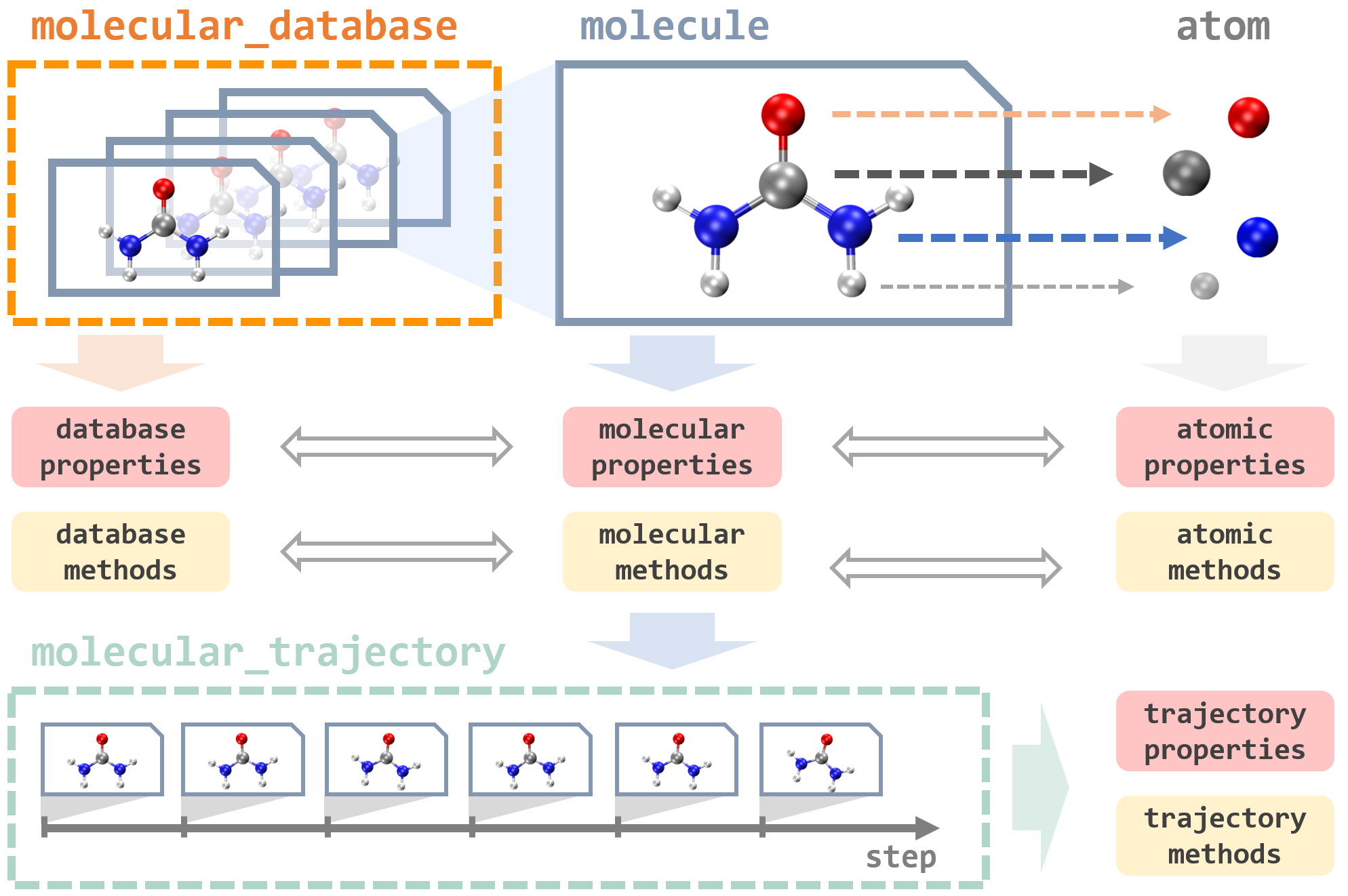

The data are as important as choosing and training the ML algorithms. MLatom provides several data structures specialized for computational chemistry needs, mainly based on versatile Python classes for atoms, molecules, molecular databases, and dynamics trajectories. These classes allow not just storing the data in a clearly structured format but also handling it by, e.g., converting to different molecular representations and data formats and splitting and sampling the data sets into the training, validation, and test subsets.

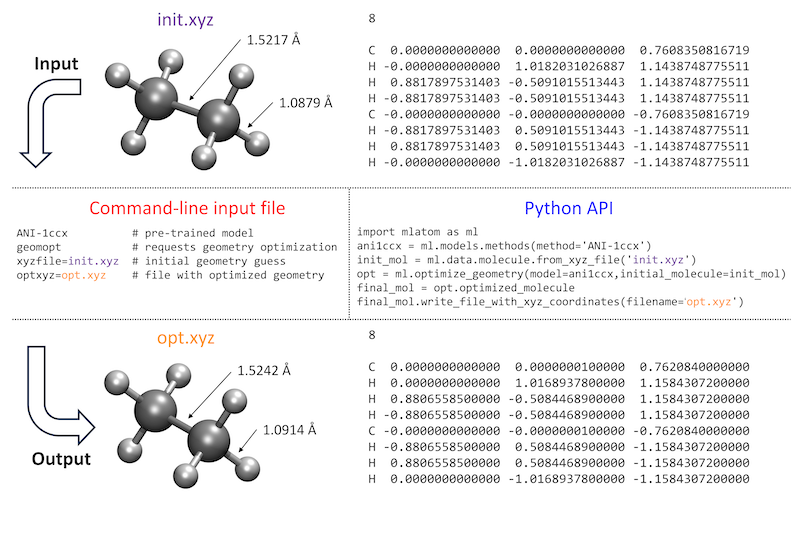

How the user interacts with the program is also important, and ideally, the features should be easily accessible and their use intuitive. MLatom calculations can be requested by providing command-line options either directly or through the input file. Alternatively, MLatom can be used as a Python module, which can be imported and used for creating calculation workflows of varying complexity. A side-by-side comparison of these two approaches is given in figure below for a common task of geometry optimization with one of the pretrained ML models ANI-1ccx:

You can directly try the same example by downloading the required file init.xyz or copy-pasting it from here:

8

C 0.0000000000000 0.0000000000000 0.7608350816719

H -0.0000000000000 1.0182031026887 1.1438748775511

H 0.8817897531403 -0.5091015513443 1.1438748775511

H -0.8817897531403 -0.5091015513443 1.1438748775511

C -0.0000000000000 -0.0000000000000 -0.7608350816719

H -0.8817897531403 0.5091015513443 -1.1438748775511

H 0.8817897531403 0.5091015513443 -1.1438748775511

H -0.0000000000000 -1.0182031026887 -1.1438748775511

If you choose to run the geometry optimization via command-line options or input file, you can download the input file geomopt.inp or copy-paste from below:

ANI-1ccx # pre-trained model

geomopt # requests geometry optimization

xyzfile=init.xyz # initial geometry guess

optxyz=opt.xyz # file with optimized geometry

Then run MLatom simulations using this input file as:

mlatom geomopt.inp &> geomopt.out

The program will print out the relevant calculation information to the output file geomopt.out.

If you choose to run the geometry optimization using PyAPI, here is the code snippet:

import mlatom as ml # import MLatom module.

ani = ml.models.methods(method='ANI-1ccx') # denfine an ANI-1cxx model.

init_mol = ml.data.molecule.from_xyz_file('init.xyz') # load the molecular structure.

final_mol = ml.optimize_geometry(model=ani, initial_molecule=init_mol).optimized_molecule # the optimized structure can be obtained by command.

final_mol.xyz_coordinates # we can see the coordinates of the last molecule.

print(final_mol.get_xyz_string()) # print the coordinates in ".xyz" format.

final_mol.write_file_with_xyz_coordinates(filename='opt.xyz') # save it in "opt.xyz".

More examples highlighting different use cases of MLatom are given in this documentation.

Data

In MLatom, everything revolves around operations on data: databases and data points of different types, such as an atom, molecule, molecular database, and molecular trajectory:

They are implemented as Python classes that contain many useful properties and provide different tools to load and dump these data-type objects using different formats. For example, the key type is a molecule that can be loaded from an XYZ file or SMILES and then automatically parsed into the constituent atom objects. Atom objects contain information about the nuclear charge and mass as well as nuclear coordinates. A molecule object is assigned with charge and multiplicity. Information about molecular and atomic properties can be passed to perform simulations, e.g., MD, with models that update and create new molecule objects with calculated quantum mechanical properties such as energies and energy gradients.

In our example of geometry optimization above, a molecule object init_mol was loaded from the file init.xyz, used as the initial guess for the geometry optimization, returning an optimized geometry as a new molecule object final_mol, which is saved into the opt.xyz file. Data objects can be directly accessed and manipulated via the MLatom Python API. When using the MLatom in the command-line mode, many similar operations are done under the hood so that the user often just needs to prepare input files in standard formats such as files with XYZ coordinates.

Molecule objects can be combined into or created by parsing the molecular database that has functions to split it into the different subsets needed for training and validation of ML models. The databases can be loaded and dumped in plain text (i.e., several files including XYZ coordinates, labels, and XYZ derivatives), JSON, and npz formats. Another data type is molecular trajectory, which consists of steps containing molecules and other information. Molecular trajectory objects are created during geometry optimization and MD simulations, and in the latter case, the step is a snapshot of MD trajectory, containing information about the time, nuclear coordinates and velocities, atomic numbers and masses, energy gradients, kinetic, potential, and total energies, and, if available, dipole moments and other properties. The trajectories can be loaded and dumped in JSON, H5MD, and plain text formats. Molecules for which XYZ coordinates are provided can be transformed in several supported descriptors: inverse internuclear distances and their version normalized relative to the equilibrium structure (RE), Coulomb matrix, and their variants.

MLatom also has separate statistics routines to calculate different error measures and perform other data analyses. Routines for preparing common types of plots, such as scatter plots and spectra, are available too.

Models and methods

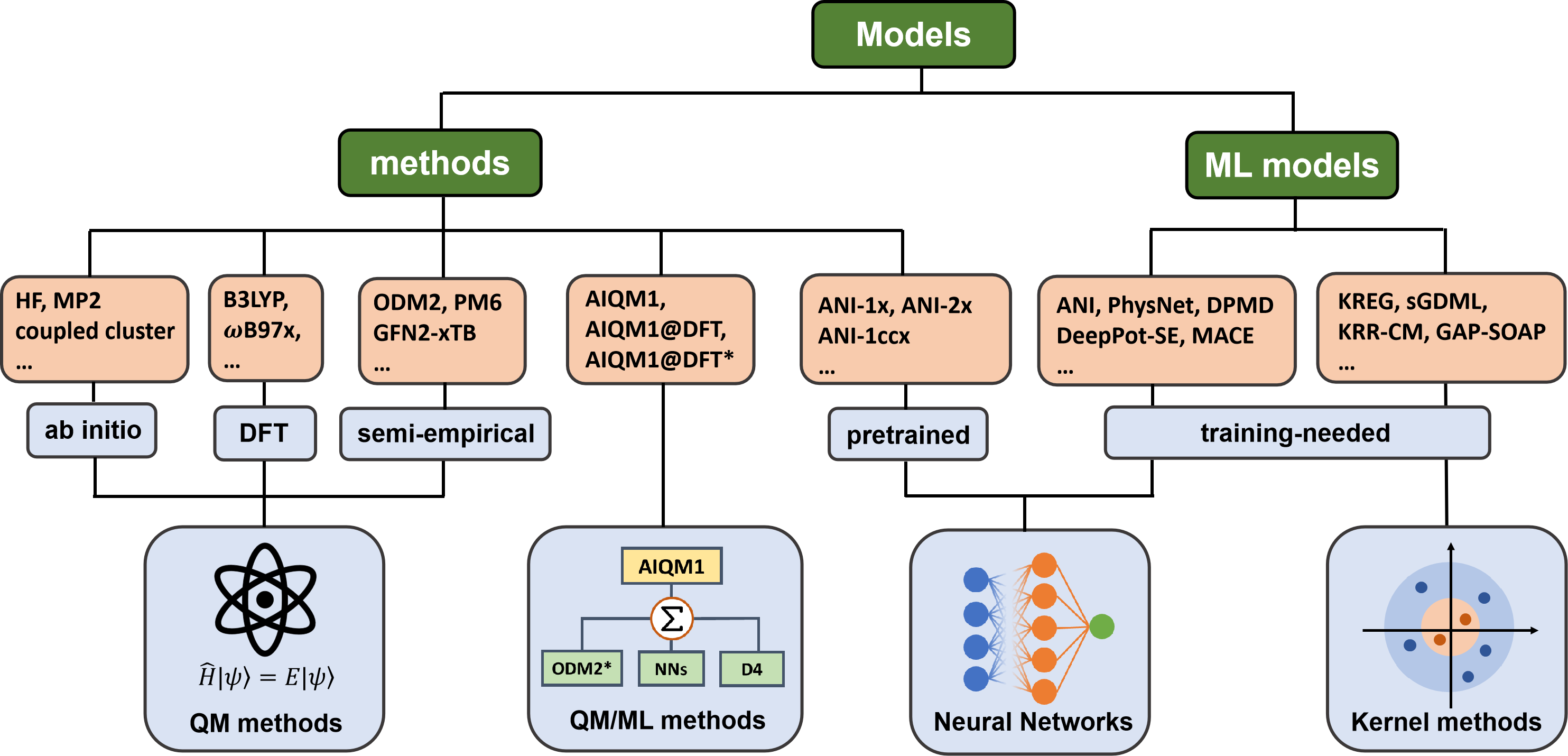

Any of the simulations need a model that provides the required output for a given input. The architecture and algorithms behind the models can be designed by an expert or chosen from the available selection. ML models typically require training to find their parameters before they can be used for simulations. Some of these models, such as universal MLPs of the ANI family, are already pretrained for the user who does not have to train them. This is similar to QM methods, which are commonly used out-of-the-box without tuning their parameters. In MLatom, we call a method any model that can be used out-of-the-box for simulations. Both pretrained ML models and QM methods belong to the methods in MLatom’s terminology, which is reflected in the keyword names. This model type also includes hybrid pretrained ML and QM methods. The overview of the models available in MLatom:

Overview of their implementations:

model type |

model name |

implementation |

|---|---|---|

Methods |

(models that can be used without training) |

|

QM methods |

ab initio methods, DFT |

|

semiempirical OMx, DFTB, NDDO-type methods |

||

semiempirical GFNx-TB methods |

interface to xtb |

|

CCSD(T)*/CBS |

interface to Orca |

|

QM/ML methods |

AIQM1, AIQM1@DFT, AIQM1@DFT* |

interfaces to MNDO and Sparrow for the ODM2* part, TorchANI for the NN part, dftd4 for D4 corrections |

pretrained ML models |

ANI-1x, ANI-2x, ANI-1ccx |

interface to TorchANI |

Models needing training |

||

neural networks |

||

interface to MACE |

||

ANI-type |

interface to TorchANI |

|

interface to DeePMD-kit |

||

interface to PhysNet |

||

kernel methods |

||

native implementation |

||

interface to sGDML |

||

KRR-CM (KRR with Coulomb matrix) |

native implementation |

|

interfaces to GAP suite and QUIP |

Methods

MLatom provides access to a broad range of methods through interfaces to many third-party, state-of-the-art software packages:

Pretrained ML models:

Universal potentials ANI-1ccx, ANI-1x, ANI-2x, ANI-1x-D4, and ANI-2x-D4. ANI-1ccx is the most accurate and approaches gold-standard CCSD(T) accuracy. We have seen an example of its use in geometry optimization in Figure 2. Other methods approach the density functional theory (DFT) level. ANI-1ccx and ANI-1x are limited to CHNO elements, while ANI-2x can be used for CHNOFClS elements. We allow the user to use D4 dispersion-corrected universal ANI potentials that might be useful for noncovalent complexes. D4 correction is taken for the ωB97X functional used to generate data for pretraining ANI-1x and ANI-2x. ANI models are provided via an interface to TorchANI and D4 corrections via the interface to dftd4. These methods are limited to predicting energies and forces for neutral closed-shell compounds in their ground state. MLatom reports uncertainties for calculations with these methods based on the standard deviation between neural network (NN) predictions.

The special ML-TPA model for predicting the two-photon absorption (TPA) cross sections.

Hybrid QM/ML methods AIQM1, AIQM1@DFT, and AIQM1@DFT* are more transferable and accurate than pretrained ML models but slower (the speed of semiempirical QM methods, which are still much faster than DFT). AIQM1 is approaching gold-standard CCSD(T) accuracy, while AIQM1@DFT and AIQM1@DFT* target the DFT accuracy for neutral, closed-shell molecules in their ground state. All these methods are limited to the CHNO elements. AIQM1 and AIQM1@DFT include explicit D4 dispersion corrections for the ωB97X functional, while AIQM1@DFT* does not. They also include modified ANI-type networks and the modified semiempirical QM method ODM2 (ODM2*, provided by either the MNDO or Sparrow program). These methods can also be used to calculate charged species, radicals, excited states, and other QM properties such as dipole moments, charges, oscillator strengths, and nonadiabatic couplings. MLatom reports uncertainties for calculations with these methods based on the standard deviation between NN predictions.

A range of established QM methods from ab initio (e.g., HF, MP2, coupled cluster, etc.) to DFT (e.g., B3LYP, ωB97X, etc.) via interfaces to PySCF, Gaussian and Orca.

A range of semiempirical QM methods (GFN2-xTB, OM2, ODM2, AM1, PM6, etc.) via interfaces to the xtb, MNDO, and Sparrow programs.

A special composite method CCSD(T)*/CBS extrapolating CCSD(T) to the complete basis set via an interface to Orca. This method is relatively fast and accurate. It allows the user to check the quality of calculations with other methods and generate robust reference data for ML. This method was used to generate the reference data for AIQM1 and ANI-1ccx.

Available Standard Models Needing Training

The field of MLPs is very rich in models. Hence, the user can often choose one of the popular MLP architectures reported in the literature rather than developing a new one. MLatom provides a toolset of MLPs from different types. These supported types can be categorized in a simplified scheme as follows:

Models based on neural networks (NNs) with fixed local descriptors to which ANI-type MLPs and DPMD belong and with learned local descriptors represented by PhysNet and DeepPot-SE. MLatom also supports a representative equivariant NN MACE, which shows superior performance for many tasks.

Models based on kernel methods (KMs) with global descriptors to which (p)KREG, sGDML, and KRR-CM belong as well as with local descriptors represented by only GAP-SOAP.

Any of these models can be trained and used for simulations, e.g., geometry optimizations or dynamics. MLatom also supports hyperparameter optimization with many algorithms including grid search, Bayesian optimization via the hyperopt package, and standard optimization algorithms available in SciPy. Generalization errors of the resulting models can also be evaluated in standard ways (hold-out and cross-validation).

Custom Models Based on Kernel Methods

MLatom also provides the flexibility of training custom models based on kernel ridge regression (KRR) for a given set of input vectors x or XYZ coordinates and any labels y. If XYZ coordinates are provided, they can be transformed in one of the several supported descriptors (e.g., inverse internuclear distances and their version normalized relative to the equilibrium structure (RE) and the Coulomb matrix). The user can choose from one of the implemented kernel functions, including the linear, Gaussian, exponential, Laplacian, and Matérn as well as periodic and decaying periodic functions, which are summarized in Table 2. These kernel functions \(k(\mathbf{x},\mathbf{x}_j;\mathbf{h})\) are key components required to solve the KRR problem of finding the regression coefficients \(\alpha\) of the approximating function \(f(\mathbf{x};\mathbf{h})\) of the input vector \(\mathbf{x}\):

Summary of the available kernel functions for solving the kernel ridge regression problem:

The kernel function, in most cases, has hyperparameters h to tune, and they can be viewed as measuring similarity between the input vector \(\mathbf{x}\) and all of the \(N_\text{tr}\) training points \(\mathbf{x}_j\) (both vectors should be of the same length \(N_x\)). In addition to the hyperparameters in the kernel function, all KRR models have at least one more regularization parameter, \(\lambda\), used during training to improve the generalizability.

Composite Models

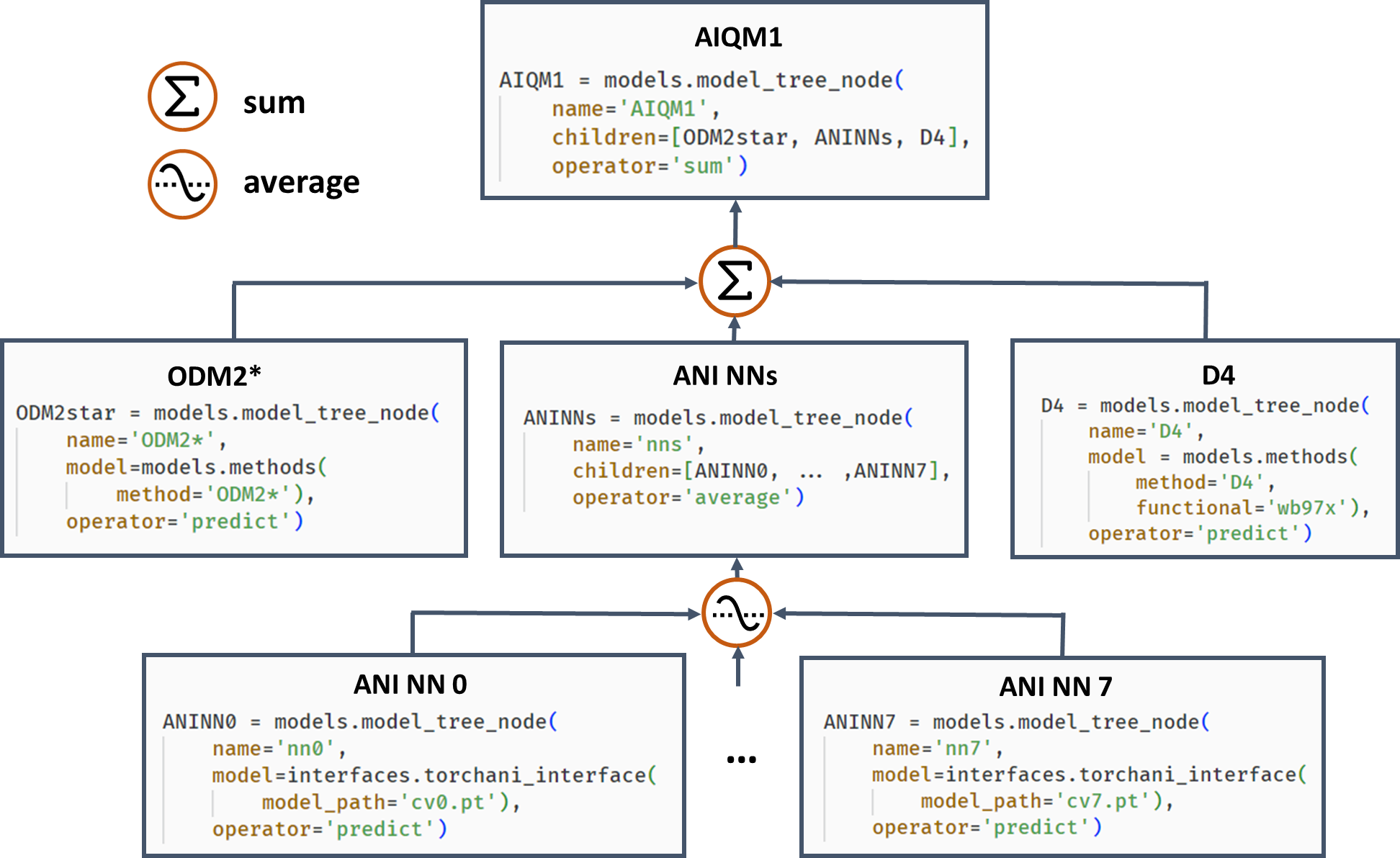

Often, it is beneficial to combine several models. One example of such composite models is based on Δ-learning where the low-level QM method is used as a baseline, which is corrected by an ML model to approach the accuracy of the target higher-level QM method. Another example is ensemble learning where multiple ML models are created, and their predictions are averaged during the simulations to obtain more robust results and use in the query-by-committee strategy of active learning. Both of these concepts can also be combined in more complex workflows as exemplified by the AIQM1 method, which uses the NN ensemble as a correcting Δ-learning model and the semiempirical QM method as the baseline. To easily implement these workflows, MLatom allows the construction of the composite models as model trees consisting of model_tree_node, see an example for AIQM1:

AIQM1’s root parent node comprises 3 children, the semiempirical QM method ODM2*, the NN ensemble, and additional D4 dispersion correction. The NN ensemble in turn is a parent of 8 ANI-type NN children. Predictions of parents are obtained by applying an operation “average” or “sum” to children’s predictions. The code snippets are shown, too.

Other examples of possible composite models are hierarchical ML, which combines several (correcting) ML models trained on (differences between) QM levels, and self-correction, when each next ML model corrects the prediction by the previous model.

Simulations

MLatom supports a range of simulation tasks such as single-point simulations, geometry optimizations, frequency and thermochemistry calculations, molecular and quantum dynamics, one- and two-photon absorption, and (ro)vibrational spectra simulations. Most of them need any model that can provide energies and energy derivatives (gradients and Hessians).

Single-point calculations

Single-point calculations are calculations of quantum mechanical properties─mainly energies and energy gradients, but also Hessians, charges, dipole moments, etc. for a single geometry. These calculations are very common in ML research in computational chemistry as they are used both to generate the reference data with QM methods for training and validating ML and to make inferences with ML to validate the trained model and generate required data for new geometries. MLatom is a convenient tool to perform single-point calculations not just for a single geometry, as in many QM packages, but for data sets with many geometries (see a dedicated tutorial).

Geometry optimizations

Locating stationary points on the PES, such as energy minima and transition states, is crucial for understanding the molecular structure and reactivity. Hence, geometry optimizations are among the most important and frequent tasks in computational chemistry. MLatom can locate energy minima and transition states (TS) with any model providing energies and gradients. An example of geometry optimization was given in the beginning, see also a dedicated tutorial. A practical application of MLatom for efficient and accurate geometry optimization was performed previously for rather large cycloparaphenylene (CPP) nanolassos and their complexes with fullerene molecules (systems with up to 200 atoms):

X-ray structure of the functionalized cycloparaphenylene (CPP) nanolasso superimposed with the structure optimized in vacuum at (a) AIQM1 and (b) ωB97X-D/def2-TZVP (both calculations can be performed with MLatom, see tutorial). Complexes of functionalized CPP and (c) C60 and (d) C70 with binding energies in kcal/mol calculated at AIQM1 in vacuum. The CPU time for these calculations is also reported.

The AIQM1 method can provide an optimized functionalized CPP structure, which has better agreement with the X-ray structure than that obtained from the DFT method at a speed 600 times faster than the DFT method. In our laboratories, we also use the AIQM1 method to optimize systems with more than a thousand of atoms on a single CPU, while for more computationally intensive tasks such as dynamics of large systems, one can use the pretrained ANI methods. Hessians are also required for the Berny TS optimization algorithm. Once the TS is located, the user can follow the intrinsic reaction coordinate (IRC) to check its nature.

Geometry optimizations can be performed with many algorithms provided by the interfaces to SciPy, ASE, or Gaussian. TS search can be performed with the dimer method in ASE and the Berny algorithm in Gaussian. IRC calculations can only be performed with the interface to Gaussian.

The seamless integration of the variety of QM and ML methods for performing geometry optimizations is advantageous because it allows the use of methods from interfaced programs that do not implement some of these simulation tasks by themselves. For example, MLatom can be used to perform TS search with the GFN2-xTB method via an interface to the xtb program, while there is no option for TS search with the latter program. Similarly, Sparrow, which provides access to many semiempirical methods, can only be used for single-point calculations. Since analytical gradients and Hessians are not available for many models and implementations, MLatom also implements a finite-difference numerical differentiation, further expanding the applicability of the models for geometry optimizations.

Frequency calculations

Simulation of vibrational frequencies is another common and important task in computational chemistry as it is useful to additionally verify the nature of stationary points, visualize molecular vibrations, calculate zero-point vibrational energy (ZPE) and thermochemical properties, and obtain spectroscopic information, which can be compared to experimental vibrational spectra. These calculations can be performed within the ridge-rotor harmonic approximation via an adapted TorchANI implementation, Gaussian interface and PySCF interface. Gaussian interface also allows the calculation of anharmonic frequencies using the second-order perturbative approach.

Similarly to geometry optimizations, MLatom can perform these simulations with any model─ML and QM or their combination that provides energies. Calculations also need Hessian, and wherever available, analytical Hessian is used. If it is unavailable, semianalytical (with analytical gradients) or fully numerical Hessian can be calculated.

Relative energy calculations

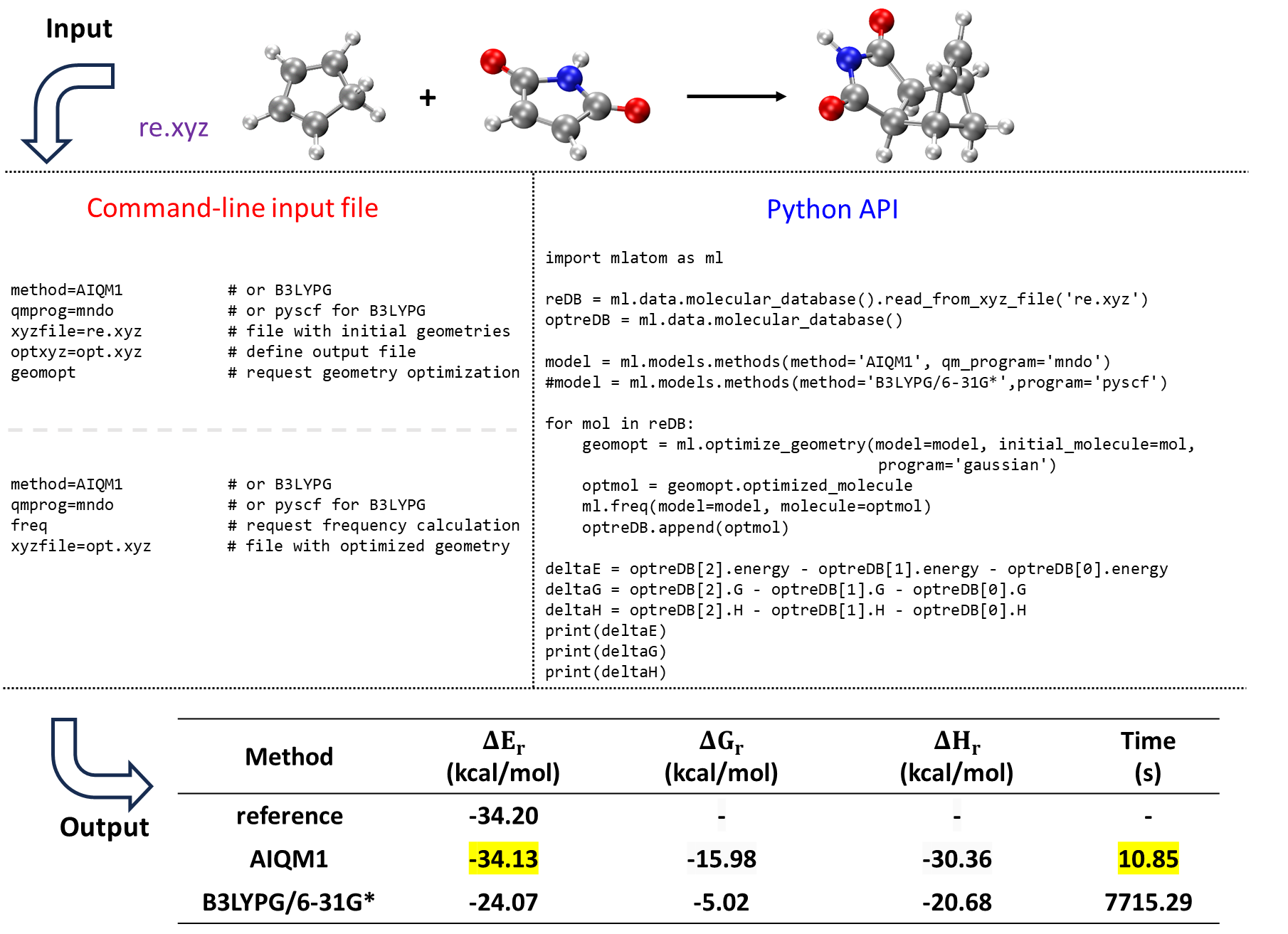

Relative energy is crucial for understanding and predicting various aspects of chemical behavior, from kinetics to thermodynamics, e.g., via calculating reaction energies, barrier heights, isomerization energies, and molecular stabilities. MLatom can produce various types of energies for molecules such as ZPE-exclusive and inclusive total energies, enthalpies, entropies, Gibbs free energies, and internal energies. Hence, the package can readily be used to evaluate different types of relative energies, e.g., the reaction enthalpies and Gibbs free energies as shown above for investigating which fullerene molecules bind stronger to the cycloparaphenylene nanolassos and for the Diels–Alder reaction of cyclopentadiene and maleimide:

In this example, calculations of ZPVE-exclusive energy, Gibbs free energy, and enthalpy changes in the Diels–Alder reaction of cyclopentadiene and maleimide forming the corresponding endo product with AIQM1 and B3LYPG/6-31G* (from the interface to PySCF; “G” in B3LYPG means that we use the B3LYP variant according to the Gaussian program convention). The reference reaction energy is from the GMTKN55 set.

We will illustrate that it is very convenient to do with the Python API (the same can be done manually through input file/command line calculations by performing thermochemical calculations for each molecule and get the changes in each type of energy using options shown in above figure):

import mlatom as ml

# read molecules from .xyz file

reDB = ml.data.molecular_database().read_from_xyz_file('re.xyz')

optreDB = ml.data.molecular_database()

# define method to calculate thermochemical properties

model = ml.models.methods(method='AIQM1', qm_program='mndo')

for mol in reDB:

# geometry optimization

geomopt = ml.optimize_geometry(model=model, initial_molecule=mol, program='gaussian')

optmol = geomopt.optimized_molecule

# frequency calculation

ml.thermochemistry(model=model, molecule=optmol)

optreDB.append(optmol)

# get relative energies

deltaE = optreDB[2].energy - optreDB[1].energy - optreDB[0].energy

deltaG = optreDB[2].G - optreDB[1].G - optreDB[0].G

deltaH = optreDB[2].H - optreDB[1].H - optreDB[0].H

print(deltaE)

print(deltaG)

print(deltaH)

The output should be the same as in the figure above.

Note

To unlock AIQM1’s full potential, we recommend to install the MNDO program for its QM part. The XACS cloud uses Sparrow instead for license reasons, which currently only provides numerical gradients limiting its applicability for geometry optimizations. Hence, you can try ANI-1ccx, e.g., directly on the XACS cloud, as it is often very good alternative (although it is limited to neutral closed-shell compounds in ground state).

Similarly, instead of Gaussian, on the XACS cloud, you can try to use ASE as an optimization engine.

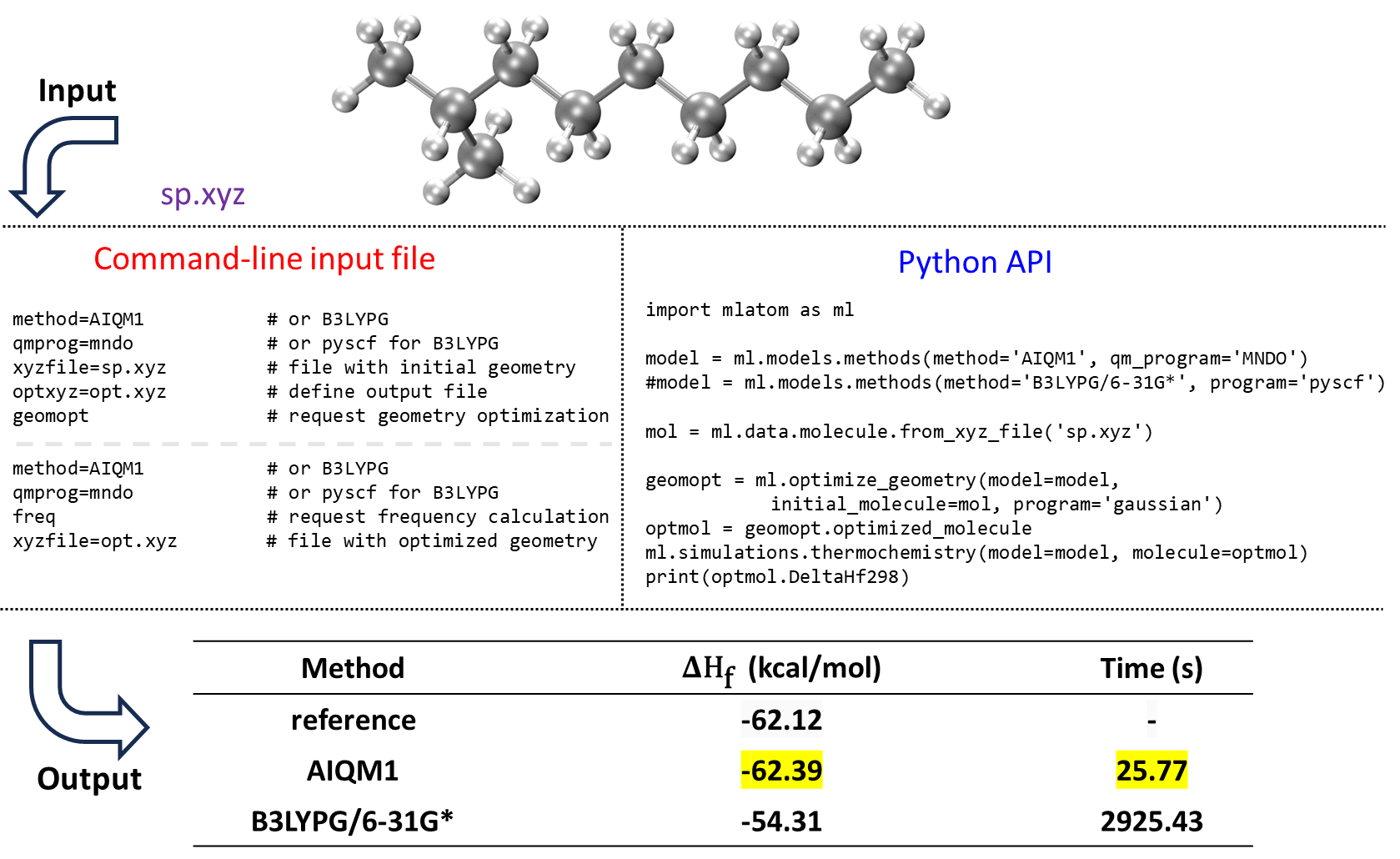

Calculation of heats of formation

The special type of relative energy calculation is evaluation of heats (enthalpies) of formation. MLatom uses the scheme analogous to those employed in the ab initio and semiempirical QM calculations to derive heats of formation:

where \(\Delta H_{\text{f},T}(A)]\) is the experimental enthalpies of formation of the free atom \(A\) and \(\Delta H_{\text{at},T}\) is the atomization enthalpy at temperature \(T\).

The scheme requires knowledge of the free atom energies E(A). Any model able to calculate them can be used for predicting heats of formation. This is straightforward for QM methods and also possible for ML models if the energies of isolated atoms were included in the training data. However, if the ML-based models are trained only on molecular species, as is commonly done, they cannot be expected to produce reasonable heats of formation. In the case of the pretrained models supported by MLatom, we have previously fitted free atom energies for AIQM1 and ANI-1ccx methods to reproduce experimental heats of formation for a set of common molecules because the NNs in these methods were not trained on an isolated atom. As a result, both methods can provide heats of formation close to chemical accuracy with speed orders of magnitude higher than those of alternative, high-accuracy QM methods. In addition, we provide an uncertainty quantification scheme based on the deviation of NN predictions in these methods to tell the users when the predictions are confident. This was useful to find errors in the experimental data set of heats of formation.

An example of using MLatom to calculate the heats of formation with the AIQM1 and B3LYP/6-31G* methods is shown for 2-methylnonane:

AIQM1 is both faster and more accurate than B3LYP, as can be seen by comparing the values with the experiment. This is also consistent with our previous benchmark.

Below is an example of input files for calculating heats of formation for a smaller ethanol molecule at ANI-1ccx:

freq # 1. requests frequency calculation

ANI-1ccx # 2. pre-trained model, the same as that used in geometry optimization

xyzfile=opt.xyz # 3. file with optimized geometry

The user can directly use the pre-optimized geometry output (opt.xyz) which we provide here:

9

C -1.691449880 -0.315985130 0.000000000

H -1.334777040 0.188413060 0.873651500

H -1.334777040 0.188413060 -0.873651500

H -2.761449880 -0.315971940 0.000000000

C -1.178134160 -1.767917280 0.000000000

H -1.534806620 -2.272315330 0.873651740

H -1.534807450 -2.272316160 -0.873650920

O 0.251865840 -1.767934180 -0.000001150

H 0.572301420 -2.672876720 0.000175020

After you created the input file, you can run MLatom, e.g., on XACS cloud, as:

mlatom freq.inp &> freq.out

The program output will be saved in file freq.out.

Alternatively, you can run the same simulation in the command line with the same options:

mlatom freq ANI-1ccx xyzfile=opt.xyz

You should be able to find in the MLatom output the vibration analysis and thermochemistry results. The vibration analysis prints out frequency, reduced mass and force constant of each normal mode. The thermochemistry part prints out zero-point energy, enthalpy, Gibbs free energy, heat of formation and other properties. For our example, the part of the output would look like:

==============================================================================

Vibration analysis for molecule 1

==============================================================================

Multiplicity: 1

Rotational symmetry number: 1

This is a nonlinear molecule

Mode Frequencies Reduced masses Force Constants

(cm^-1) (AMU) (mDyne/A)

1 261.0407 1.0929 0.0439

2 286.9474 1.1295 0.0548

3 428.0328 2.7260 0.2943

4 841.1644 1.0947 0.4564

5 928.2688 2.3346 1.1853

6 1082.6338 3.1779 2.1946

7 1138.6399 1.9583 1.4959

8 1212.5257 1.5518 1.3442

9 1287.5002 1.1134 1.0874

10 1292.8263 1.0682 1.0520

11 1426.7497 1.2307 1.4760

12 1457.4408 1.3203 1.6524

13 1496.9454 1.1496 1.5178

14 1501.2654 1.0458 1.3887

15 1524.8936 1.0529 1.4426

16 3063.7060 1.0530 5.8232

17 3076.9020 1.1120 6.2026

18 3093.2039 1.0342 5.8299

19 3201.4189 1.1000 6.6424

20 3213.8757 1.1004 6.6964

21 3741.4822 1.0681 8.8098

==============================================================================

Thermochemistry for molecule 1

==============================================================================

Standard deviation of NNs : 0.00063190 Hartree 0.39652 kcal/mol

Energy : -154.89195912 Hartree

ZPE-exclusive internal energy at 0 K: -154.89196 Hartree

Zero-point vibrational energy : 0.08101 Hartree

Internal energy at 0 K: -154.81095 Hartree

Enthalpy at 298 K: -154.80576 Hartree

Gibbs free energy at 298 K: -154.83631 Hartree

Atomization enthalpy at 0 K: 1.21200 Hartree 760.54458 kcal/mol

ZPE-exclusive atomization energy at 0 K: 1.29301 Hartree 811.37653 kcal/mol

Heat of formation at 298 K: -0.09030 Hartree -56.66187 kcal/mol

Molecular Dynamics

MLatom has a native implementation of molecular dynamics (MD) supporting any kind of model that provides forces, not necessarily conservative (see tutorial). Often, calculations based on QM methods are possible in variants called ab initio or Born–Oppenheimer MD (BOMD). The proliferation of ML potentials makes it possible to perform BOMD-quality dynamics at a cost comparable to molecular mechanics force fields or much faster than commonly used DFT-based BOMD, which allows routine simulations of large systems such as a quadruple assembly of octatetrayne-bridged ortho-perylene diimide dyads with ca. 400 atoms at ANI-1ccx:

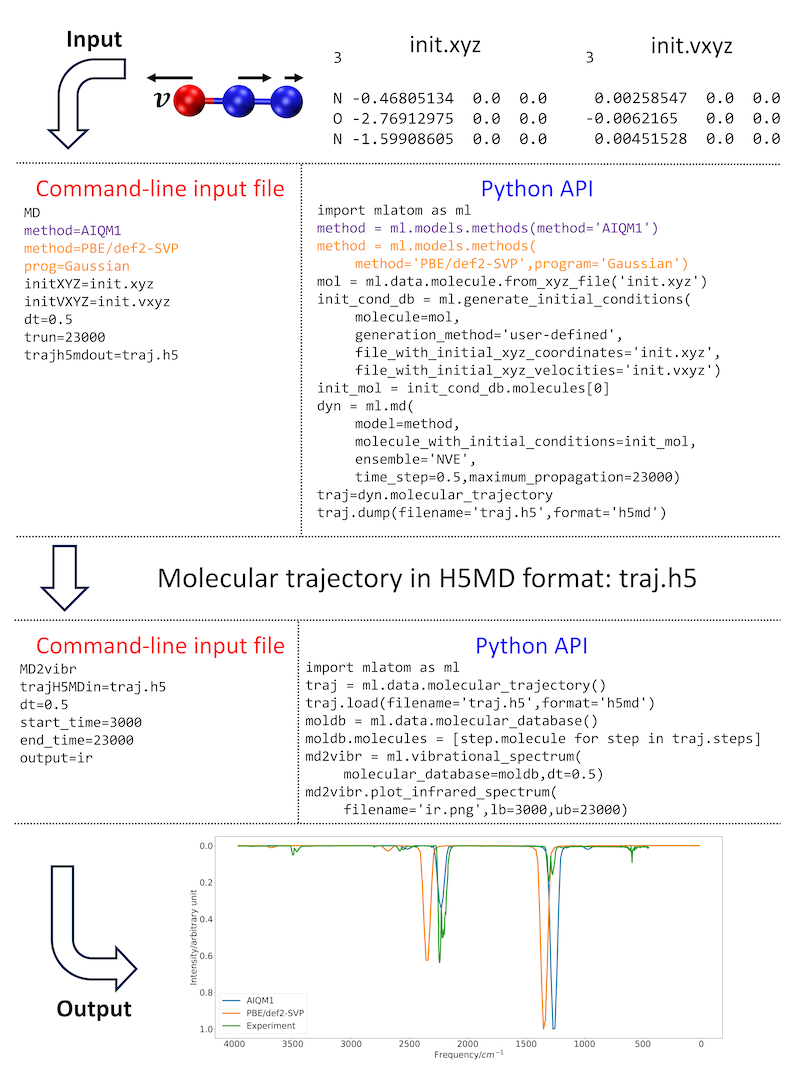

The accuracy of such simulations can be also high; for example, the IR spectra obtained from the MD with AIQM1 method are more accurate than those from a much slower DFT MD (see below).

Currently, simulations in NVE and NVT ensembles, based on the velocity Verlet algorithm, are possible. NVT simulations can be carried out with the Andersen and Nosé–Hoover thermostats, and the implementation of other thermostats is expected to be available in the future. Trajectories can be saved in different formats, including plain text, JSON, and more compact H5MD database formats. In addition, we made an effort to better integrate the KREG model implemented in Fortran into the main Python-based MLatom code, which makes MD with KREG very efficient.

The input file h2_md_kreg.inp shows how to run dynamics for the hydrogen molecule in the NVT ensemble using the Nosé–Hoover thermostat:

# h2_md_kreg.inp

MD # 1. requests molecular dynamics

initConditions=user-defined # 2. use user-defined initial conditions

initXYZ=h2_md_kreg_init.xyz # 3. file with initial geometry; Unit: Angstrom

initVXYZ=h2_md_kreg_init.vxyz # 4. file with initial velocity; Unit: Angstrom/fs

dt=0.3 # 5. time step; Unit: fs

trun=30 # 6. total time; Unit: fs

thermostat=Nose-Hoover # 7. use Nose-Hoover thermostat

ensemble=NVT # 8. NVT ensemble

temperature=300 # 9. Run MD at 300 Kelvin

MLmodelType=KREG # 10. KREG model is used

MLprog=MLatomF # 11. use KREG implemented in the Fortran part of MLatom

MLmodelIn=h2_kreg_energies.unf # 12. file with the trained model

For the tutorial, you can download the files with the initial XYZ coordinates h2_md_kreg_init.xyz, initial XYZ velocities h2_md_kreg_init.vxyz and model file h2_kreg_energies.unf.

The initial coordinates are defined in the XYZ format (Angstrom) as:

2

H 0.0 0.0 1.0

H 0.0 0.0 0.0

The initial velocities are also defined in a simplified XYZ format (Angstrom/fs):

2

0.0 0.0 -0.05

0.0 0.0 0.05

Run the MD simulation.

mlatom h2_md_kreg.inp &> h2_md_kreg.out

After the end of the MD, the trajectory will be saved in the files traj.xyz with the XYZ coordinates, traj.vxyz with XYZ velocities, traj.ekin, traj.epot, and traj.etot with kinetic, potential, and totla energies (Hartree), respectively, and traj.grad with energy gradients (Hartree/Angstrom).

Note that MD can also be propagated without forces using the concept of the 4D-spacetime AI atomistic models, which directly predict nuclear configurations as a function of time. Our realization of this concept, called the GICnet model, is currently available in a publicly available development version of MLatom.

The above implementations can propagate MD on an adiabatic potential energy surface, i.e., typically for ground-state dynamics. Nonadiabatic MD based on the trajectory surface hopping algorithms can also be performed with the help of MLatom, currently, via Newton-X’s interface to MLatom. MLatom also supports quantum dissipative dynamics, as described in the next section.

Quantum Dissipative Dynamics

It is often necessary and beneficial to treat the entire system quantum mechanically and also include the environmental effects. This is possible via many quantum dissipative dynamics (QD) algorithms, and an increasing number of ML techniques were suggested to accelerate such simulations. MLatom allows performing several unique ML-accelerated QD simulations (see tutorial) using either a recursive scheme based on KRR or a conceptually different AI-QD approach predicting the trajectories as a function of time or the OSTL technique outputting the entire trajectories in one shot. These approaches are enabled via an interface to a specialized program MLQD.

In the recursive KRR scheme, a KRR model is trained, establishing a map between the future and past dynamics. This KRR model, when provided with a brief snapshot of the current dynamics, can be leveraged to forecast future dynamics. In the AI-QD approach, a convolution neural network (CNN) model is trained mapping simulation parameters and time to the corresponding system’s state. Using the trained CNN model, the state of the system can be predicted at any time without the need to explicitly simulate the dynamics. Similarly, the ultrafast OSTL method utilizes a CNN-based architecture and, based on simulation parameters, predicts future dynamics of the system’s state up to a predefined time in a single shot. In addition, as optimization is a key component in training, users can optimize both KRR and CNN models using MLatom’s grid search functionality for KRR and Bayesian optimization via the hyperopt library for CNN. Moreover, we also incorporate the autoplotting functionality, where the predicted dynamics is plotted against the provided reference trajectory.

Rovibrational (infrared and power) spectra

Rovibrational spectra can be calculated in several ways with MLatom. The simplest method is by performing frequency calculations on an optimized molecular geometry (see above and tutorial). This requires any model providing Hessians and, preferably, dipole moments. Another one is performing molecular dynamics simulations with any model providing energy gradients and, then, postprocessing the trajectories (see manual).

Both frequency calculations and the MD-based approach require the model to also provide dipole moments to calculate the absorption intensities. If no dipole moments are provided, only frequencies are available, or, in the case of MD, only power spectra rather than IR can be obtained. The IR spectra are obtained via the fast Fourier transform using the autocorrelation function of dipole moment with our own implementation. The power spectra only need the fast Fourier transform, which is also implemented in MLatom.

We have previously shown that the high quality of the AIQM1 method results in rather accurate IR spectra obtained from MD simulations compared to spectra obtained with a representative DFT (which is also substantially slower) or a semiempirical QM method, see an example for the N2O molecule:

MLatom generates spectra for each method; here, the results are collated and shown together with the experimental spectrum for comparison.

One-photon UV/Vis absorption spectra

UV/vis absorption spectra simulations are computationally intensive because they require calculation of excited-state properties. In addition, better-quality spectra can be obtained via the nuclear ensemble approach (NEA), which necessitates the calculation of excited-state properties for thousands of geometries for high precision. MLatom implements an interpolation ML-NEA scheme that improves the precision of the spectra with a fraction of the computational cost of traditional NEA simulations (see tutorial):

The cross section predicted by ML-NEA shown on the right is compared to traditional QC-NEA and the single-point convolution approach (QC-SPC).

Currently, the ML-NEA calculations are based on interfaces to Newton-X and Gaussian and utilize the sampling of geometries from a harmonic Wigner distribution. This scheme also automatically determines the optimal number of required reference calculations, providing a user-friendly, black-box implementation of the algorithm.

Two-photon absorption

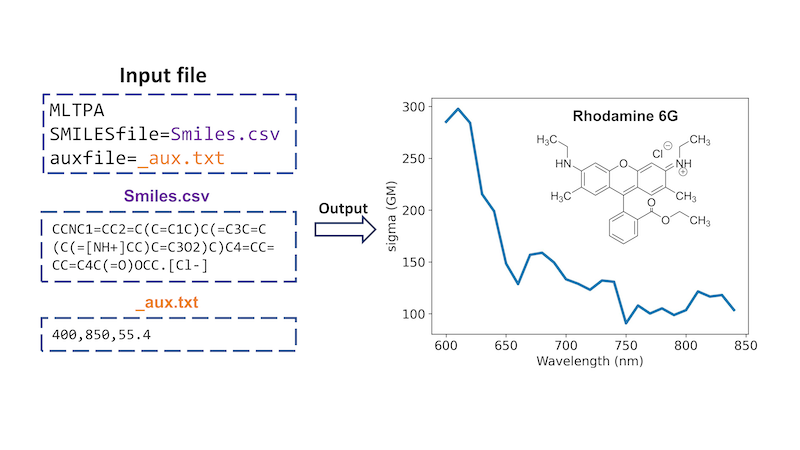

Beyond one-photon absorption, MLatom has an implementation of a unique ML approach for calculating two-photon absorption (TPA) cross sections of molecules just based on their SMILES strings, which are converted into the required descriptors using the interface to RDKit, and solvent information. This ML-TPA approach is very fast with accuracy comparable to that of much more computationally intensive QM methods. We provide an ML model pretrained on experimental data. ML-TPA was tested in real laboratory settings and was shown to provide a good estimate for new molecules not present in the training experimental database. An example of using ML-TPA to predict two-photon absorption (see manual):

Machine Learning

We discussed the supported types of models and how they can be applied to simulations. Here, we briefly overview the general considerations for training and validating the ML models with MLatom. The models share the standard MLatom’s conventions for input, output, training, hyperparameter optimization, and testing, which allows one to conveniently switch from one model to another and benchmark them.

Training

To create an ML model, the user has to choose and train the ML model and prepare data. MLatom provides many tools for the different stages of this process. The model can be either chosen from a selection of provided types of ML models with a predefined architecture or customized based on available algorithms and preset models. Once a model is chosen, it must be trained, and, in many cases, it is advisable or even required (particularly in the case of the kernel methods) to optimize its hyperparameters.

For training, the data set should be appropriately prepared. MLatom has strict naming conventions for data set splits to avoid any confusion when changing and comparing different model types. All of the data that are used directly or indirectly for creating an ML model are called the training set. This means that the validation set, which can be used for hyperparameter optimization or early stopping during NN training, is a subset of the training set. Thus, the part of the training set remaining after excluding the validation set is called the subtraining set and is actually used for training the model, i.e., optimizing model parameters (weights in NN terminology and regression coefficients in kernel method terminology).

MLatom can split the training data set into the subtraining and validation data subsets or create a collection of these subsets via cross-validation. The sampling into the subsets can be performed randomly or using furthest-point or structure-based sampling.

In the case of kernel methods, the final model in MLatom is typically trained on the entire training set after hyperparameter optimization. This is possible because the kernel methods have a closed analytical solution to finding their regression coefficients, and after hyperparameters are appropriately chosen, overfitting can be mitigated to a great extent. In the case of NNs, the final model is the one trained on the subtraining set because it would be too dangerous to train on the entire training set without any validation subset to check for the signs of overfitting.

Training predefined types of ML models

Most predefined types of ML models, such as ANI-type or KREG models, expect XYZ molecular coordinates as input. This should be either provided by the user or can be obtained using MLatom’s conversion routines, e.g., from the SMILES strings, which rely on OpenBabel’s Pybel API. These models have a default set of hyperparameters, but, especially in the case of kernel methods such as KREG, it is still strongly advised to optimize them. The models can be, in principle, trained on any molecular property. Most often, they are used to learn PESs and hence require energy labels in the training set. The PES model accuracy can be greatly improved if the energy gradients are also provided for training. Thus, the increased training time is usually justified.

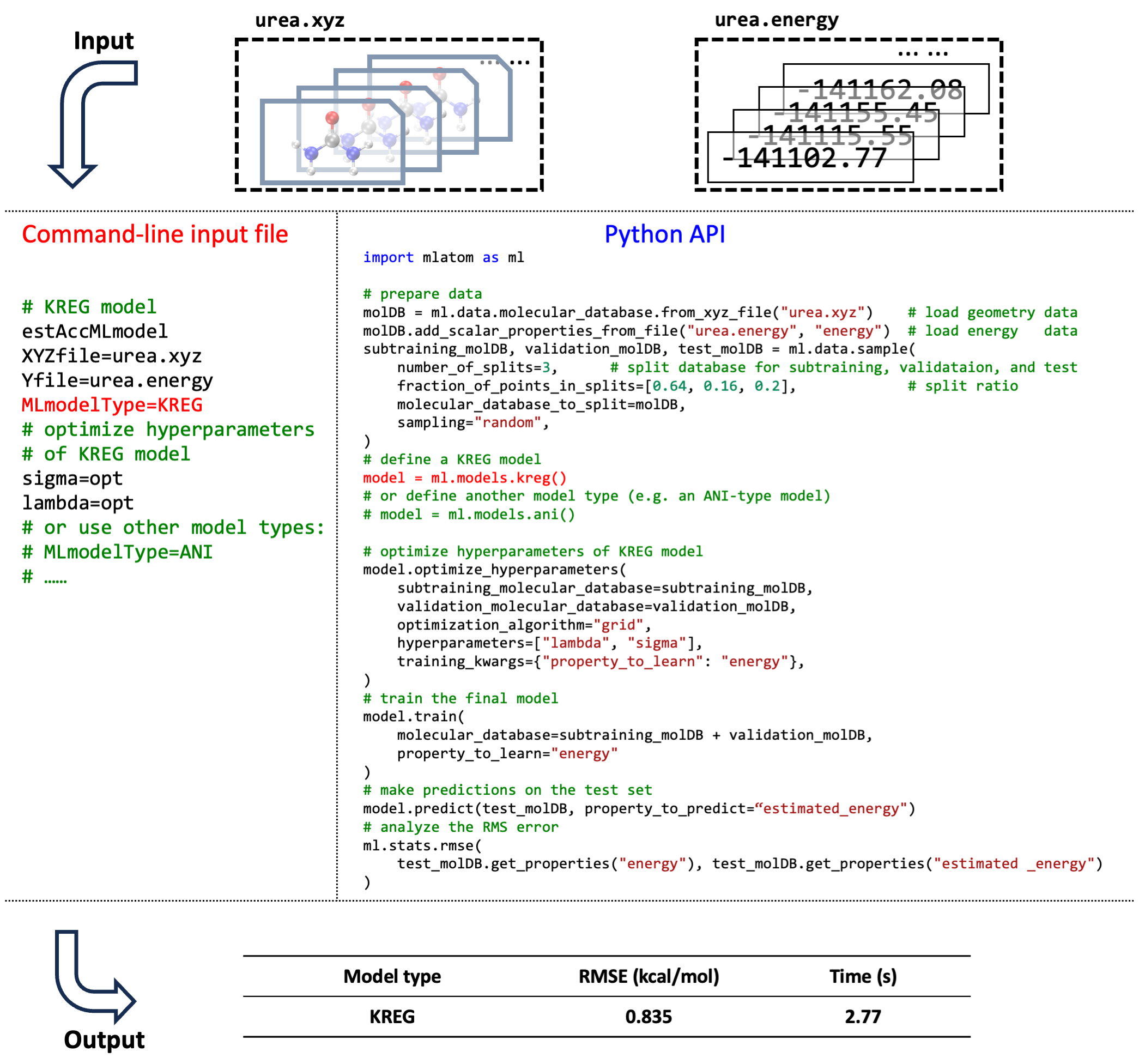

Side-by-side comparison of the usage of MLatom in both the command-line mode and via Python API for training and testing the KREG model on a 1000-point data set on the urea molecular PES data set randomly sampled from the WS22 database (see tutorial):

Hyperparameter optimization of the KREG model required is also shown. The KREG model is both fast to train and accurate (achieved an RMSE below 1 kcal/mol within a few seconds), which is a typical situation for small-size molecular databases, while for larger databases, NN-based models might be preferable. Command-line and Python script inputs for using a different type of ML model (e.g., ANI-type) are also shown in the figure as comments.

See also tutorial for MACE model.

Designing and training custom ML models

MLatom’s user can also create models on any set of input vectors and labels using a variety of KRR kernel functions. In this case, hyperparameter optimization is strongly advised too. In all other aspects, training of such KRR models is similar to training the predefined models, i.e., the preparation of the data set is also performed by splitting it into the required subsets for training and validation.

Importantly, the user can construct models of varying complexity using a model tree implementation. Special cases of such composite models are Δ-learning and self-correcting models, and they can be trained similarly to other ML models by supplying input vectors or XYZ coordinates and labels. In the case of Δ-learning, the user must supply the baseline values. For other more complicated models, the user must train and combine each component separately.

Hyperparameter optimization

The performance of ML models strongly depends on the chosen hyperparameters, such as the regularization parameters for training kernel methods and the number of layers in NNs. Hence, it is often necessary to optimize the hyperparameters to achieve reasonable results and to improve the accuracy. The hyperparameter optimization commonly requires multiple trainings, making it an expensive endeavor, and caution must be paid in balancing performance/cost issues.

MLatom can optimize hyperparameters by minimizing the validation loss using one of the many available algorithms. The validation loss is usually based on the error in the validation set, which can be a single hold-out validation set or a combined cross-validation error.

For a few hyperparameters, the robust grid search on the log or linear scale can be used to find optimal values. It is a common choice for kernel methods (see above example of optimizing hyperparameters of the KREG model, which is the kernel method). For a larger number of hyperparameters, other algorithms are recommended instead. Popular choices are Bayesian optimization with the tree-structured Parzen estimator (TPE) and many SciPy optimizers.

The choice of the validation loss also matters. In most cases, MLatom minimizes the root-mean-square error (RMSE) for the labeled data. However, when multiple labels are provided, i.e., energies and energy gradients for learning PES, the choice should be made on how to combine them in the validation loss. By default, MLatom calculates the geometric mean of the RMSEs for energies and gradients. The users can also choose a weighted sum of RMSEs, but in this case, they must choose the weight. In addition, the user can supply MLatom with any custom validation loss function, which can be arbitrarily complicated.

Evaluating models

Once the model has been trained, it is common to evaluate its generalization ability before deployment in production simulations. MLatom provides dedicated options for such evaluations. The simplest and one of the most widespread approaches is calculating the error for the independent hold-out test set not used in the training. To emphasize, in MLatom terminology, the test set has no overlap with the training set, which might consist of the subtraining and validation subsets. Alternatively, cross-validation and its variant leave-one-out cross-validation are recommended whenever computationally affordable, especially for small data sets. MLatom provides a broad range of error measures for the test set, including RMSE, mean absolute error (MAE), mean signed error, the Pearson correlation coefficient, the R2 value, outliers, etc. The testing can be performed with training and hyperparameter optimization for most models, including Δ-learning and self-correcting models.

Since the errors depend on the size of the training set, the learning curves showing this dependence are very useful for comparing different models. MLatom can generate the learning curves, which have been instrumental in preparing guidelines for choosing the ML interatomic potential.

See a dedicated (although a bit dated as no Python API use is shown) tutorial on how to benchmark different types of machine learning potentials.

Developing and contributing to MLatom

For developers, MLatom provides a flexible platform for implementation of the new interfaces as they just need to provide a new class supporting prediction (and optionally training) with the new model. For example, the implementation of MACE was done in one working day, and another working day was needed for testing. Once implemented, these models can be readily used for simulations.

The contributions to the main GitHub repository of MLatom (https://github.com/dralgroup/mlatom) are highly welcome and can be done via pull requests from branches (on request) and forks that the contributors may also create for their private developments of methods and features. The pull requests may be incorporated into official releases after the review and eventual adjustments by the main developers’ team managing the main GitHub repository.

Support and contact

If you have further questions, criticism, and suggestions, we would be happy to receive them in English or Chinese via email, Slack (preferred), or WeChat (please send an email to request to add you to the XACS user support group).